When you build reliable automations with n8n, you often need a place to store data — not only credentials and workflow metadata but also files, cached items, and lookup records. This guide shows practical, secure steps to connect n8n to Redis (fast key-value/cache), Google Cloud Storage (GCS) for object files, and Supabase for app-friendly storage and Postgres data. You’ll get step-by-step setup, importable test flows, and simple troubleshooting tips.

Quick note: n8n uses SQLite by default for local setups but supports external databases and external binary storage via environment variables. Use external storage for production workloads.

What / Why / Who this guide is for

- What: How to connect n8n to common storage backends (Redis, GCS, Supabase) and run test flows.

- Why: External storage improves reliability (files survive container restarts), performance (Redis cache), and scalability.

- Who: n8n hobbyists, devs, and no-code makers who want production-ready storage patterns.

Quick comparison: Redis, GCS, Supabase

| Backend | Best for | Cost/notes |
| --- | --- | --- |
| Redis | Cache, ephemeral queues, session store | Low-latency; not for large files. Use managed Redis for persistence. |
| Google Cloud Storage | Large files, backups, media | Object storage with lifecycle rules & versioning. |
| Supabase Storage | App files, CDN, small/medium assets | Easy auth + Postgres integration; good for web apps. |

Common configuration patterns

Environment variables are the standard way to pass credentials to n8n without hardcoding them in workflows. n8n also supports file-based overrides: append _FILE to a variable name and point it at a file that holds the value, which works well with Docker or Kubernetes secrets. Pro tip: If you need a walkthrough on n8n credentials, see our n8n credentials & setup guide.

Example .env (local dev)

# Database
DB_TYPE=postgresdb
DB_POSTGRESDB_HOST=postgres.example.com
DB_POSTGRESDB_PORT=5432
DB_POSTGRESDB_DATABASE=n8n
DB_POSTGRESDB_USER=n8n
DB_POSTGRESDB_PASSWORD_FILE=/run/secrets/db_password

# External storage (example)
N8N_EXTERNAL_BINARY_DATA_STORAGE=gcs
N8N_EXTERNAL_GCS_BUCKET=my-n8n-bucket
GOOGLE_APPLICATION_CREDENTIALS=/run/secrets/gcs_service_account.json

# Redis (if using as cache)
REDIS_HOST=redis.example.com
REDIS_PORT=6379
REDIS_PASSWORD_FILE=/run/secrets/redis_password

Best practice: Keep secrets out of Git. Use Docker secrets, cloud secret managers (AWS Secrets Manager, Google Secret Manager), or Kubernetes Secrets with the _FILE pattern. See the n8n docs for details.
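
To make the _FILE pattern concrete, here is a minimal Python sketch of how a helper script of your own could resolve a setting the same way: prefer NAME_FILE (a path to a mounted secret) and fall back to NAME. The helper name read_setting is hypothetical, and this is not n8n's internal code; it only illustrates the convention.

```python
import os
from typing import Mapping, Optional


def read_setting(name: str, env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Resolve a setting using the NAME / NAME_FILE convention.

    Hypothetical helper for illustration; it is not part of n8n.
    """
    env = os.environ if env is None else env
    file_path = env.get(f"{name}_FILE")
    if file_path:
        # The secret lives in a mounted file (Docker or Kubernetes secret).
        with open(file_path, encoding="utf-8") as handle:
            return handle.read().strip()
    # Fall back to the plain variable, or None if neither is set.
    return env.get(name)
```

For example, read_setting("DB_POSTGRESDB_PASSWORD") would return the contents of /run/secrets/db_password when DB_POSTGRESDB_PASSWORD_FILE points at that file.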

Redis — cache, queue, and light DB

When to pick Redis

Use Redis when you need very fast reads/writes, ephemeral queues, rate limiting, or session data. Not for large files. Managed Redis providers offer free tiers and simple dashboards.
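
Rate limiting is a good example of the small, fast counters Redis handles well. The sketch below shows a fixed-window limiter built on the INCR and EXPIRE commands. To keep it runnable without a server, it uses a hypothetical in-memory stand-in (InMemoryCounterStore); allow_request and the ratelimit: key prefix are also illustrative names, not part of n8n or Redis.

```python
import time
from typing import Callable, Dict, Tuple


class InMemoryCounterStore:
    """Tiny stand-in for the two Redis commands used below (INCR, EXPIRE)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[int, float]] = {}  # key -> (count, expires_at)

    def incr(self, key: str) -> int:
        count, expires_at = self._data.get(key, (0, float("inf")))
        if self._clock() >= expires_at:
            count, expires_at = 0, float("inf")  # key expired, start over
        count += 1
        self._data[key] = (count, expires_at)
        return count

    def expire(self, key: str, seconds: int) -> None:
        count, _ = self._data[key]
        self._data[key] = (count, self._clock() + seconds)


def allow_request(store, client_id: str, limit: int = 5, window_seconds: int = 60) -> bool:
    """Fixed-window rate limit: at most `limit` calls per window per client."""
    key = f"ratelimit:{client_id}"
    count = store.incr(key)
    if count == 1:
        # First hit in this window: start the countdown.
        store.expire(key, window_seconds)
    return count <= limit
```

Any client object with incr and expire methods can be passed as store. Note that against a real server the two calls are separate commands, so a production limiter would usually wrap them in a transaction or script.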

n8n Storage Database Setup – Quick steps (create Redis and connect)

1. Go to https://redis.io/ and click “Try Redis”.

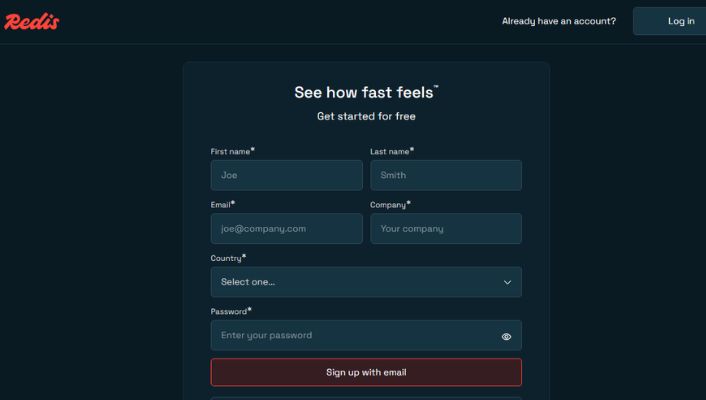

2. Create an account

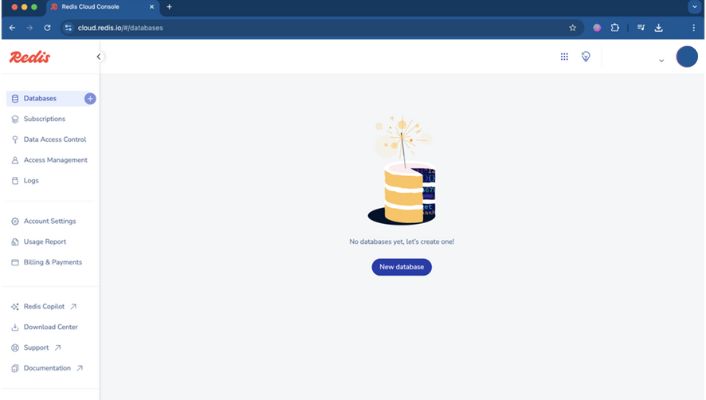

3. Log in and click “New database”

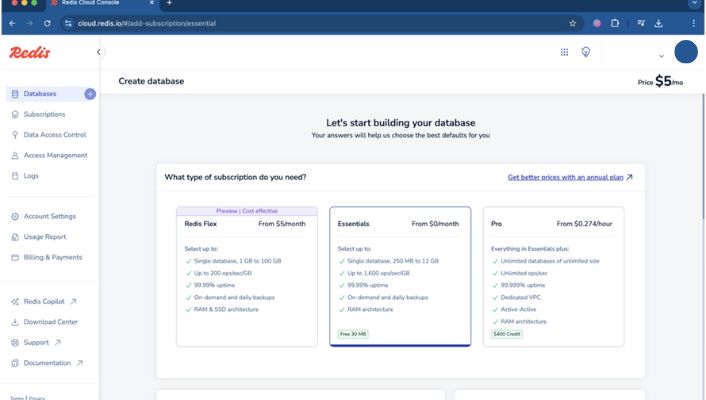

4. Select “Essentials”.

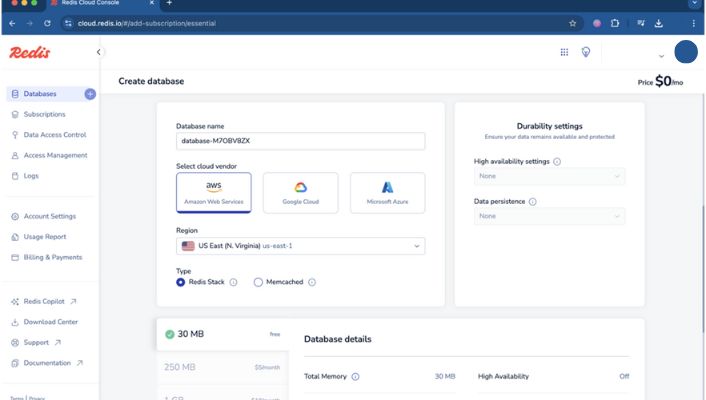

5. Enter a Database name, choose any cloud vendor, and pick the nearest Region.

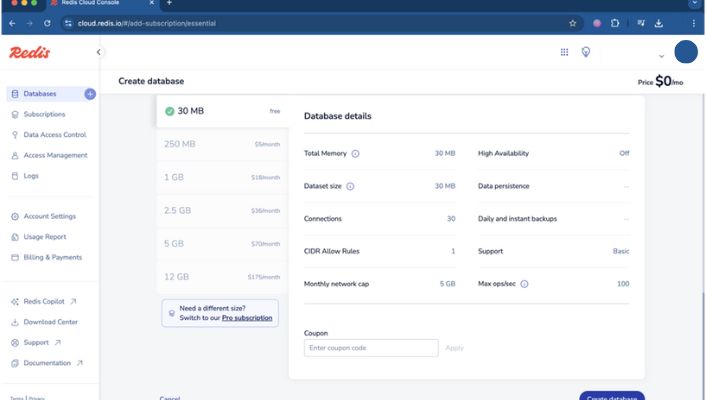

6. Choose the “30 MB Free Plan” and click “Create database”.

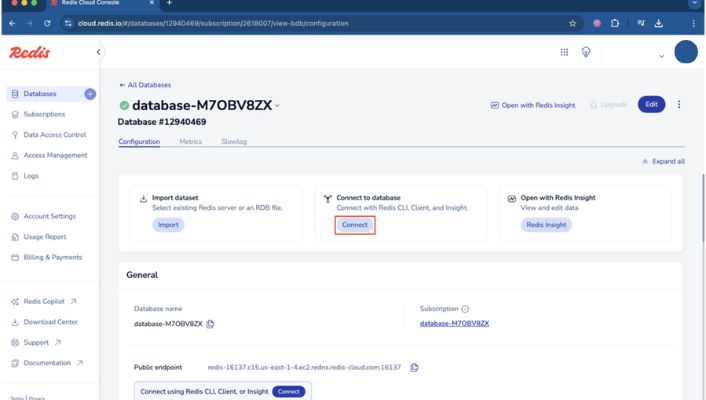

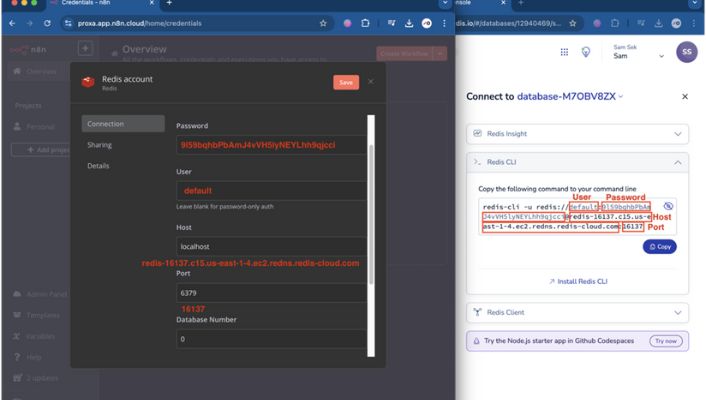

7. Click “Connect to database” → “Connect”.

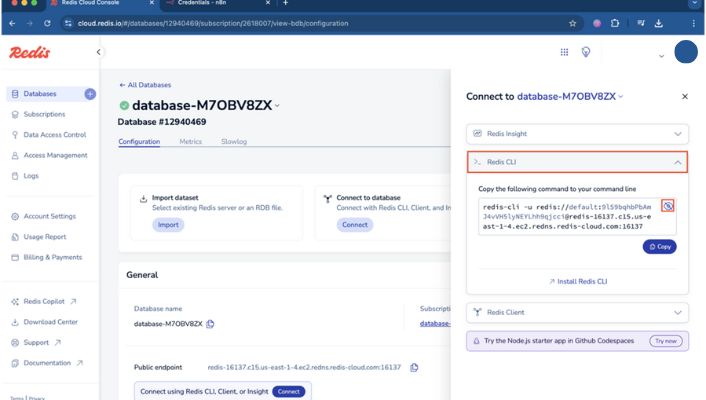

8. Open “Redis CLI” and click the eye icon (or “View”) to reveal connection details.

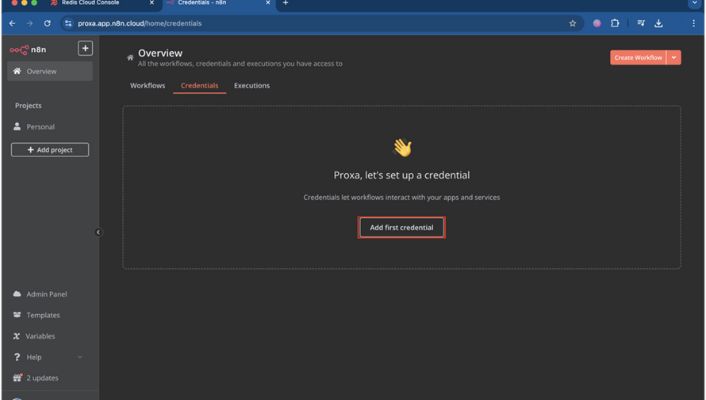

9. In your n8n editor go to “Credentials” → “Add credential”.

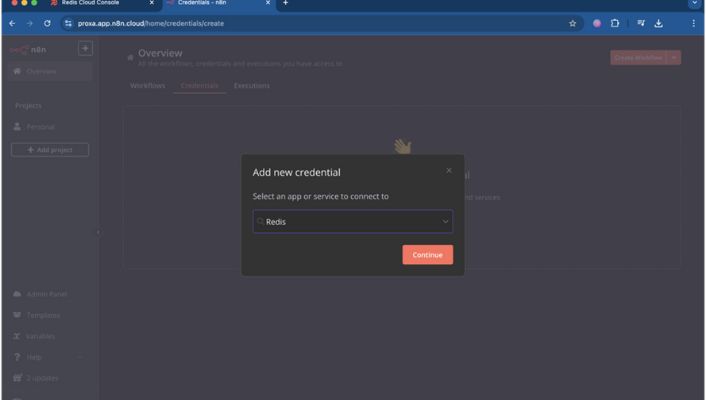

10. In “Search for app” type “Redis” and click “Continue”.

11. Fill in the Password, User, Host, and Port fields with the connection details from step 8, then click “Save”.

Example n8n workflow (Redis SET → GET) — importable JSON

Import this JSON into n8n (Editor → Import), then edit credentials to match your Redis instance.

{
  "name": "Redis SetGet Example",
  "nodes": [
    {
      "name": "Manual Trigger",
      "type": "n8n-nodes-base.manualTrigger",
      "typeVersion": 1,
      "position": [250, 200]
    },
    {
      "name": "Redis SET",
      "type": "n8n-nodes-base.redis",
      "typeVersion": 1,
      "position": [500, 200],
      "parameters": {
        "operation": "set",
        "key": "n8n_test_key",
        "value": "Hello from n8n",
        "expire": true,
        "ttl": 3600
      },
      "credentials": {
        "redis": {
          "id": "your-redis-credential-id",
          "name": "Redis"
        }
      }
    },
    {
      "name": "Redis GET",
      "type": "n8n-nodes-base.redis",
      "typeVersion": 1,
      "position": [750, 200],
      "parameters": {
        "operation": "get",
        "key": "n8n_test_key"
      },
      "credentials": {
        "redis": {
          "id": "your-redis-credential-id",
          "name": "Redis"
        }
      }
    }
  ],
  "connections": {
    "Manual Trigger": { "main": [[ { "node": "Redis SET", "type": "main", "index": 0 } ]] },
    "Redis SET": { "main": [[ { "node": "Redis GET", "type": "main", "index": 0 } ]] }
  }
}

Troubleshooting Redis

- Connection errors: check firewall/VPC rules.

- Auth errors: verify username/password; try connecting with redis-cli first.

- Persistence: enable AOF/RDB or use your provider’s snapshot/backup features. See the Redis docs for details.
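
If redis-cli is not installed, the connection and auth checks above can be approximated with a short Python sketch that speaks the Redis wire protocol (RESP) directly. The function names encode_command and check_redis are hypothetical, and the sketch assumes a plain TCP endpoint without TLS and a Redis 6+ server that accepts AUTH with a username (the "default" user is an assumption about your provider).

```python
import socket
from typing import Optional


def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode("utf-8")
        out.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(out)


def check_redis(host: str, port: int, password: Optional[str] = None,
                username: str = "default", timeout: float = 5.0) -> str:
    """Open a plain TCP connection, optionally AUTH, then PING.

    Returns the server's raw reply, for example "+PONG" on success.
    Assumes no TLS; TLS-only endpoints would need an ssl-wrapped socket.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        if password:
            conn.sendall(encode_command("AUTH", username, password))
            reply = conn.recv(1024).decode("utf-8", "replace")
            if not reply.startswith("+OK"):
                return reply.strip()  # server rejected the credentials
        conn.sendall(encode_command("PING"))
        return conn.recv(1024).decode("utf-8", "replace").strip()
```

A timeout or connection refusal from check_redis points at firewall/VPC rules, while an error reply after AUTH points at the username or password.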

Google Cloud Storage (GCS) — file and binary storage

When to pick GCS

Choose GCS for large assets: images, backups, logs, and binary objects. GCS supports lifecycle rules, versioning, and regional buckets. See the GCS docs for details.

n8n Storage Database Setup – Quick steps: create service account and bucket

- Create a service account (see https://cloud.google.com/iam/docs/service-accounts).

- Grant only the permissions it needs (for testing, Storage Object Admin is fine; tighten later).

- Create a JSON key for the service account and store it securely (don’t commit it to source control).

- Create a bucket and note its name (see https://cloud.google.com/storage/docs/creating-buckets).

Configure n8n to use GCS

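Mount the service account JSON at a secure path inside the container (for example as a Docker secret) and point GOOGLE_APPLICATION_CREDENTIALS at that path. The variable already holds a file path, so it needs no _FILE suffix: GOOGLE_APPLICATION_CREDENTIALS=/run/secrets/gcs_service_account.json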

Set environment variables in n8n (confirm the exact variable names against the n8n configuration reference for your version):

N8N_EXTERNAL_BINARY_DATA_STORAGE=gcs
N8N_EXTERNAL_GCS_BUCKET=my-n8n-bucket
GOOGLE_APPLICATION_CREDENTIALS=/run/secrets/gcs_service_account.json
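
Before starting n8n, it can help to confirm that the file behind GOOGLE_APPLICATION_CREDENTIALS really is a service account key. The sketch below is one way to do that in Python; check_service_account_file and REQUIRED_FIELDS are hypothetical names, and the check only looks at a few well-known fields of the key file rather than validating the key with Google.

```python
import json
from typing import List

# Fields every service account key file is expected to carry.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_file(path: str) -> List[str]:
    """Return a list of problems found in a service account key file."""
    try:
        with open(path, encoding="utf-8") as handle:
            data = json.load(handle)
    except FileNotFoundError:
        return [f"file not found: {path}"]
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["top-level JSON value is not an object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not data.get(name)]
    if data.get("type") not in (None, "service_account"):
        problems.append(f"unexpected type: {data['type']!r}")
    return problems
```

An empty list means the file looks structurally fine; permission errors at upload time then point at the role or bucket rather than the key file.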

Example n8n workflow (Upload file to GCS)

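Import this JSON, set your credentials, and confirm each node’s parameters in the editor, since field names can vary between node versions.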

{
  "name": "GCS Upload Example",
  "nodes": [
    { "name": "Manual Trigger", "type": "n8n-nodes-base.manualTrigger", "typeVersion": 1, "position": [250, 200] },
    {
      "name": "Set Binary Data",
      "type": "n8n-nodes-base.set",
      "typeVersion": 1,
      "position": [450, 200],
      "parameters": {
        "values": {
          "binary": [
            { "key": "data", "value": "={{Buffer.from('My sample file content').toString('base64')}}" }
          ]
        },
        "options": {}
      }
    },
    {
      "name": "Google Cloud Storage",
      "type": "n8n-nodes-base.googleCloudStorage",
      "typeVersion": 1,
      "position": [700, 200],
      "parameters": {
        "operation": "upload",
        "bucket": "my-n8n-bucket",
        "binaryPropertyName": "data",
        "fileName": "sample.txt"
      },
      "credentials": {
        "googleCloud": {
          "id": "gcs-credential-id",
          "name": "GCS Service Account"
        }
      }
    }
  ],
  "connections": {
    "Manual Trigger": { "main": [[ { "node": "Set Binary Data", "type": "main", "index": 0 } ]] },
    "Set Binary Data": { "main": [[ { "node": "Google Cloud Storage", "type": "main", "index": 0 } ]] }
  }
}

Troubleshooting GCS uploads

- Permission errors usually mean the service account role is too limited. Try Storage Object Admin for testing, then narrow.

- Bucket not found: confirm the bucket name and region, and check the GCS docs if the error persists.

- For environment variable details, see the n8n configuration reference.

Supabase — app-friendly storage and Postgres metadata

When to pick Supabase

Use Supabase when you need both file storage (CDN-enabled) and database metadata (Postgres) with simple auth. Good for web apps and prototypes.

n8n Storage Database Setup – Quick steps: Supabase setup

- Create a Supabase project, open Storage, and create a bucket.

- Copy the service role key from the project’s API settings for server-side access; use the anon/public key only for client-side usage with RLS.

- In n8n, create a Supabase credential with your project URL and service role key, then select it in the Supabase node.

Example flow idea (high level)

- Trigger: HTTP/Webhook receives an uploaded file or URL.

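- Action: Upload the file to a Supabase Storage bucket. The built-in Supabase node works with table rows, so call the Storage REST API from an HTTP Request node for the upload.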

- Action: Insert a metadata row into a Supabase table (filename, URL, uploaded_by).

- Optional: Need OCR before upload? Try our Image to Text Tool.
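
The upload and metadata steps in this flow map to two HTTP calls. As a rough Python sketch, the helpers below build (but do not send) those requests. The function names, the abc.supabase.co URL in the usage note, and the files table are hypothetical, and the endpoint paths /storage/v1/object/... and /rest/v1/... are assumptions based on Supabase's REST conventions, so check them against the Supabase docs for your project.

```python
import json
import urllib.request
from urllib.parse import quote


def build_upload_request(project_url: str, service_key: str, bucket: str,
                         object_path: str, content: bytes,
                         content_type: str = "application/octet-stream") -> urllib.request.Request:
    """Build a Storage upload request (assumed path: /storage/v1/object/<bucket>/<path>)."""
    url = f"{project_url.rstrip('/')}/storage/v1/object/{quote(bucket)}/{quote(object_path)}"
    headers = {
        "Authorization": f"Bearer {service_key}",
        "apikey": service_key,
        "Content-Type": content_type,
    }
    return urllib.request.Request(url, data=content, headers=headers, method="POST")


def build_metadata_request(project_url: str, service_key: str, table: str,
                           row: dict) -> urllib.request.Request:
    """Build an insert for one metadata row (assumed path: /rest/v1/<table>)."""
    url = f"{project_url.rstrip('/')}/rest/v1/{quote(table)}"
    headers = {
        "Authorization": f"Bearer {service_key}",
        "apikey": service_key,
        "Content-Type": "application/json",
        "Prefer": "return=representation",  # ask the API to echo the inserted row
    }
    body = json.dumps(row).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")
```

Passing either request to urllib.request.urlopen would send it. In n8n, the same URL, headers, and body would go into an HTTP Request node, with the key kept in a credential rather than in the node itself.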

Test flows and retry strategies

- How to run test flows: Import the JSON examples into n8n Editor (Menu → Import). Map your credentials (Redis, GCS, Supabase). Run manual triggers or test executions.

- Retry strategies: use node-level retries and error workflows (Error Trigger → notification). For HTTP/Storage nodes, check response codes and apply retries with backoff. For critical writes, add idempotency keys to avoid duplicates.
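
For custom scripts that sit next to your workflows, the retry advice above can be sketched in Python as follows. This is an illustration of exponential backoff with jitter plus a derived idempotency key, not n8n's built-in retry mechanism; retry_with_backoff and idempotency_key are hypothetical names.

```python
import hashlib
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def idempotency_key(*parts: str) -> str:
    """Derive a stable key from the fields that identify one logical write."""
    joined = "\x1f".join(parts)  # unit separator avoids ambiguous joins
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def retry_with_backoff(action: Callable[[], T], attempts: int = 4,
                       base_delay: float = 0.5, max_delay: float = 8.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `action` until it succeeds or `attempts` is exhausted."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay + random.uniform(0, delay / 2))  # add jitter
    raise ValueError("attempts must be at least 1")
```

Sending the same idempotency key on every retry of a write lets the receiving side recognize and drop duplicates, provided that side stores and checks the key.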

Security, backups, and monitoring

- Rotate keys regularly (every 60–90 days) and use least-privilege roles.

- Backups: for Redis, enable snapshotting/AOF or managed backups; for GCS, enable versioning and lifecycle rules; for Postgres, schedule backups. See the Redis persistence overview for details.

- Monitoring: use provider logs plus n8n workflow logs/alerts on failures.
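
As an example of the GCS lifecycle rules mentioned above, this Python sketch builds a lifecycle configuration in the JSON shape GCS uses. The function name and the example thresholds are hypothetical; pick values that match your own retention needs.

```python
import json


def build_lifecycle_config(delete_after_days: int, max_newer_versions: int) -> dict:
    """Build a GCS lifecycle configuration as a plain dict."""
    return {
        "rule": [
            {
                # Delete live objects once they are older than N days.
                "action": {"type": "Delete"},
                "condition": {"age": delete_after_days, "isLive": True},
            },
            {
                # Delete a noncurrent version once at least K newer versions exist.
                "action": {"type": "Delete"},
                "condition": {"numNewerVersions": max_newer_versions, "isLive": False},
            },
        ]
    }


def to_json(config: dict) -> str:
    """Serialize the configuration for saving to a file such as lifecycle.json."""
    return json.dumps(config, indent=2)
```

Saving to_json(build_lifecycle_config(30, 3)) as lifecycle.json gives a file that can be applied with a command such as gsutil lifecycle set lifecycle.json gs://my-n8n-bucket.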

Common mistakes & fixes

- Hardcoding credentials in nodes → switch to env vars or secret files.

- Wrong bucket/region → double-check exact bucket name and region in Google Cloud Console.

- No retry/alerts → add error workflows and notifications.

Conclusion & next steps

You now have a clear path for adding storage to n8n: pick Redis for fast ephemeral data, GCS for large files, and Supabase for app-centric storage + Postgres. Import the example flows, add credentials, and test. After that, set up secrets management, add retry/error handling, and enable backups for production.

FAQ

Q1: Do I need a paid plan to use Redis/GCS/Supabase with n8n?

No — all three providers offer free tiers suitable for testing. Free tiers have limits (storage size, request quotas). For production use choose a paid tier that fits your reliability needs and set up backups and monitoring.

Q2: Where should I store service account JSON files?

Store them outside your codebase, ideally in Docker secrets, Kubernetes secrets, or cloud secret managers. Use the _FILE pattern with n8n so the secret is mounted into the container and referenced as a file path in env vars.

Q3: Can n8n use Redis as the primary database?

No — Redis is great for caches, queues, and ephemeral data, but n8n requires a proper database like Postgres for workflow metadata in production. Use Redis alongside a supported DB for best results.

Q4: How do I test file uploads to GCS from n8n?

Import the GCS Upload Example JSON, set your GCS credentials, run the Manual Trigger, then check the bucket in Google Cloud Console for the uploaded file. If you get permission errors, verify the service account role and bucket name.

Q5: What retry strategy should I add for storage writes?

Add node-level retries for intermittent errors, use idempotency keys to avoid duplicates, and create an error workflow that sends alerts (email/Slack) on repeated failures. For large file uploads, retry in chunks or use resumable upload APIs where supported.

Q6: Can n8n store files in Google Cloud Storage?

Yes — use the Google Cloud Storage node and authenticate with a service account JSON. Set N8N_EXTERNAL_BINARY_DATA_STORAGE=gcs if you want n8n to store binary data externally.

Q7: Is Redis persistent for n8n data?

Redis can be persistent if you enable snapshotting/AOF or use a managed provider with backups. For durable long-term storage, prefer GCS for files and Postgres for metadata.

Q8: Does Supabase work with n8n?

Yes. n8n has a Supabase node for working with Postgres table rows in your project; for file uploads to Supabase Storage, call the Storage API from an HTTP Request node.

Q9: How do I keep service account keys secure?

Use Docker/Kubernetes secrets or a cloud secret manager, and the _FILE env var pattern to avoid plaintext in env vars.

Q10: Should I use external storage for a small hobby project?

For simple hobby projects, local storage is fine, but external storage increases reliability and is better for containers/cloud hosting.